Generative AI and the Real World

Motivation & Inspiration

GenAI is constantly in the news, and the rate of innovation is like nothing we have seen before. There is no doubt that it will change lots of things, but some things are more obvious than others. It’s great at building websites, writing blog posts, creating pictures and much more, but what intrigues me is how these capabilities help to solve real issues rather than just being “cool”.

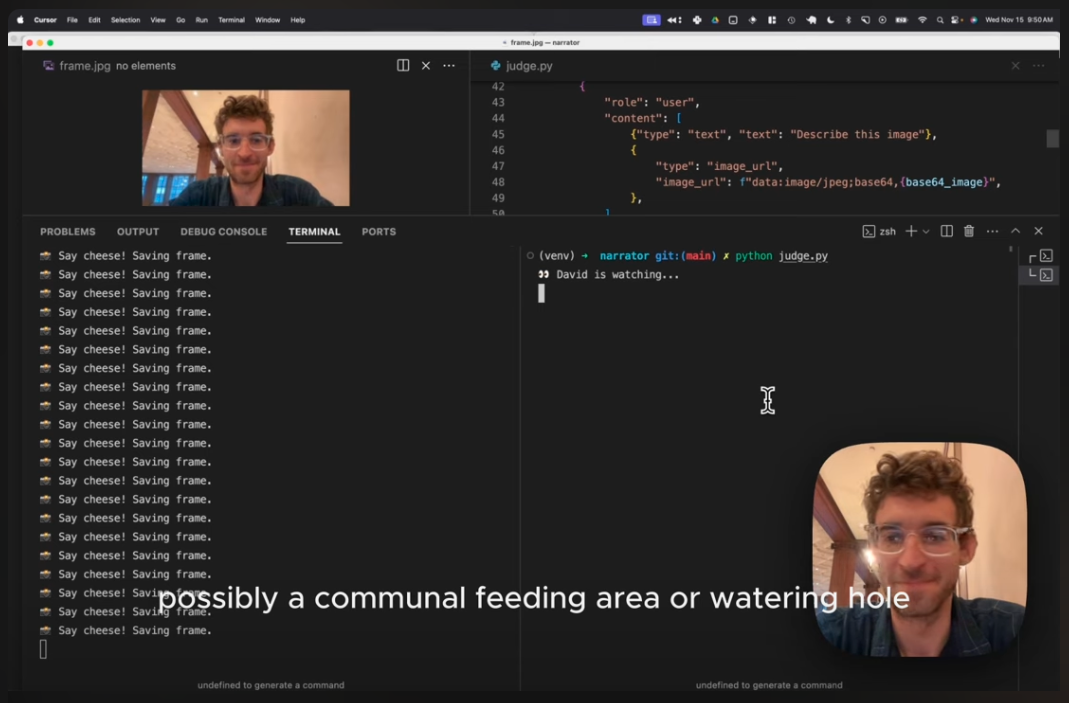

This project was inspired by another project called “Narrator” that has David Attenborough narrate what he sees from your webcam. It’s a cool little project that chains OpenAI and ElevenLabs to do something fun.

Check out Narrator here: https://github.com/cbh123/narrator

As someone who only dabbles in coding for simple tasks, perhaps once a year, my familiarity with Python could best be described as basic. Every time I use it I effectively have to relearn it.

And so, the scene is set: can I use GenAI both to simplify creating the code and to power something useful? This led to “What if it was mobile?” and “Could it help guide someone and help avoid danger?”

Project Scope

The aim of VisGuide is straightforward: to provide visual assistance to the partially sighted or blind using commodity hardware and GenAI. This initiative explores the potential of Generative AI to serve a practical, impactful purpose, reflecting a broader trend towards technology solutions that enhance quality of life for individuals with disabilities.

The Foundation of VisGuide

VisGuide’s hardware setup is simple, utilising a Raspberry Pi Zero with a camera and a power bank for mobility. Connectivity is achieved through a mobile phone hotspot, enabling the device to operate in diverse environments. The choice of hardware underscores the project’s commitment to accessibility and affordability.

The software, written in Python, is the core of VisGuide. It incorporates:

- Generative AI: Utilising OpenAI’s vision models, VisGuide interprets visual data to provide verbal descriptions of the user’s surroundings.

- ElevenLabs: The platform is used for voice synthesis, ensuring the verbal feedback is clear and natural and that the text-to-speech process is as fast as possible.

- ChatGPT & GitHub Copilot: The development process leveraged a combination of ChatGPT and GitHub Copilot for code development. I found that both worked well, but I mainly used ChatGPT because the user experience was simple and, when it didn’t do what I needed, I just changed my prompt to be more specific.
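The capture, describe and speak chain listed above can be sketched roughly as follows. This is an illustration only, not the code from the VisGuide repository: `build_vision_messages` and `narrate_frame` are hypothetical names, and the describe and speak steps are passed in as plain callables so the sketch runs without API keys. The message layout assumes the image-input format of OpenAI's chat API, where a JPEG frame is sent as a base64 data URL.

```python
import base64
from typing import Callable


def build_vision_messages(jpeg_bytes: bytes, prompt: str) -> list:
    """Package one camera frame and a text prompt as a chat-style message list."""
    encoded = base64.b64encode(jpeg_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {
                    "type": "image_url",
                    "image_url": {"url": f"data:image/jpeg;base64,{encoded}"},
                },
            ],
        }
    ]


def narrate_frame(
    jpeg_bytes: bytes,
    prompt: str,
    describe: Callable[[list], str],  # assumption: wraps the vision model call
    speak: Callable[[str], None],     # assumption: wraps the text-to-speech call
) -> str:
    """Run one frame through the describe step, then hand the text to the speak step."""
    messages = build_vision_messages(jpeg_bytes, prompt)
    description = describe(messages)
    speak(description)
    return description
```

In a real device, `describe` would call the vision model and `speak` would call the voice service and play the audio; keeping them as parameters also makes it easy to swap either service later.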

Working POC on GitHub

VisGuide works, and you can access the project here: https://github.com/hirsts/visguide

You can run it on a Mac laptop to test it, and it also runs fine on a Raspberry Pi Zero.

It is not yet optimised for speed, so it runs a little slowly on the Raspberry Pi but much more quickly on the Mac.

Here’s an example of the “guide” narration:

Modes and Uses

VisGuide can be run in either continuous or on-demand mode. In on-demand mode, the user presses a button to capture the scene and have it narrated; in continuous mode, it captures and narrates in a loop every 5 seconds.
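As a rough sketch of how the two modes differ in control flow, the loop below either waits for a button press or pauses between captures. It is an assumption about structure rather than the project's actual code: `run_visguide_loop`, `narrate_once` and `wait_for_button` are hypothetical names, and the `max_cycles` and `sleep` parameters exist only so the loop can be exercised without hardware.

```python
import time
from typing import Callable, Optional


def run_visguide_loop(
    mode: str,
    narrate_once: Callable[[], None],     # assumption: captures and narrates one scene
    wait_for_button: Callable[[], None],  # assumption: blocks until the button is pressed
    interval_seconds: float = 5.0,
    max_cycles: Optional[int] = None,     # None means run until interrupted
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Narrate scenes on demand or continuously; return the number of narrations."""
    if mode not in ("continuous", "on_demand"):
        raise ValueError(f"unknown mode: {mode!r}")
    cycles = 0
    while max_cycles is None or cycles < max_cycles:
        if mode == "on_demand":
            wait_for_button()
        narrate_once()
        cycles += 1
        if mode == "continuous":
            # Fixed pause after each narration, so the real period is
            # the pause plus however long the narration itself took.
            sleep(interval_seconds)
    return cycles
```

Pausing after each narration, rather than firing on a strict timer, avoids queuing up a new capture while the previous description is still being spoken.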

VisGuide has two primary uses. The first is as a visual assistant, or “guide”; the second is “tourist” mode, where it describes the scene in colourful language to create a mental picture for the user.

- Guide Mode

This is intended to provide a brief and succinct description of the scene to help the user better navigate and avoid obstacles and risk.

- Tourist Mode

This is where the user can participate in “sightseeing” and have a colourful and artistic narration of the general scene. Why shouldn’t partially sighted or blind people be able to participate in “sightseeing”? This, combined with the user’s other senses, helps users to appreciate what their sighted peers can experience.
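One simple way to realise the two uses is to keep a separate prompt per mode and send the selected one alongside each image. The sketch below assumes that approach; the prompt wording is invented for illustration and is not taken from the VisGuide repository, and `select_prompt` is a hypothetical helper.

```python
# Illustrative prompts only: the wording is an assumption, not the project's text.
MODE_PROMPTS = {
    "guide": (
        "Describe this scene in one or two short sentences for a blind or "
        "partially sighted person. Mention obstacles and hazards first."
    ),
    "tourist": (
        "Describe this scene in vivid, colourful language so that a blind or "
        "partially sighted person can form a mental picture of it."
    ),
}


def select_prompt(mode: str) -> str:
    """Return the narration prompt for the given mode."""
    try:
        return MODE_PROMPTS[mode]
    except KeyError:
        raise ValueError(f"unknown mode: {mode!r}") from None
```

Keeping the prompts in one table means a new use can be added by writing a new entry rather than changing the capture or speech code.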

Impact and Potential

VisGuide is a working example of digital tools and services helping with a physical problem. It explores how they can offer increased independence to visually impaired users and showcases the potential of Generative AI to address real-world challenges.

What’s Next?

Interactive Uses

It would be ideal to enable the user to “query” the scene. For example, the user might ask “Where is the road crossing?” or “Please guide me to the door”. Here, the user’s input would update or augment the prompt to influence the narration response.

Integrated Hardware

Wearing a Raspberry Pi around your neck with a battery pack is hardly ideal, but it is great for development. Ideally, VisGuide would work with something like Meta’s Smart Glasses, which have the camera and audio output built in and would provide an unobtrusive user experience.

Local Processing

VisGuide currently requires internet access to leverage the SaaS LLM services. Mobile phones are incredibly powerful compute devices these days, so it would be ideal to perform all processing locally and remove the dependency on connectivity. However, this would significantly increase the complexity.

Conclusion

VisGuide’s development journey illustrates the power of combining simple tools with cutting-edge AI to create solutions that have a real-world impact.

As the project moves forward, it’s intended to be thought-provoking and to demonstrate the profound capabilities of technology when applied with purpose and vision.

This project is more than just a technological achievement; it’s a pathway to greater accessibility and independence for those it aims to serve.